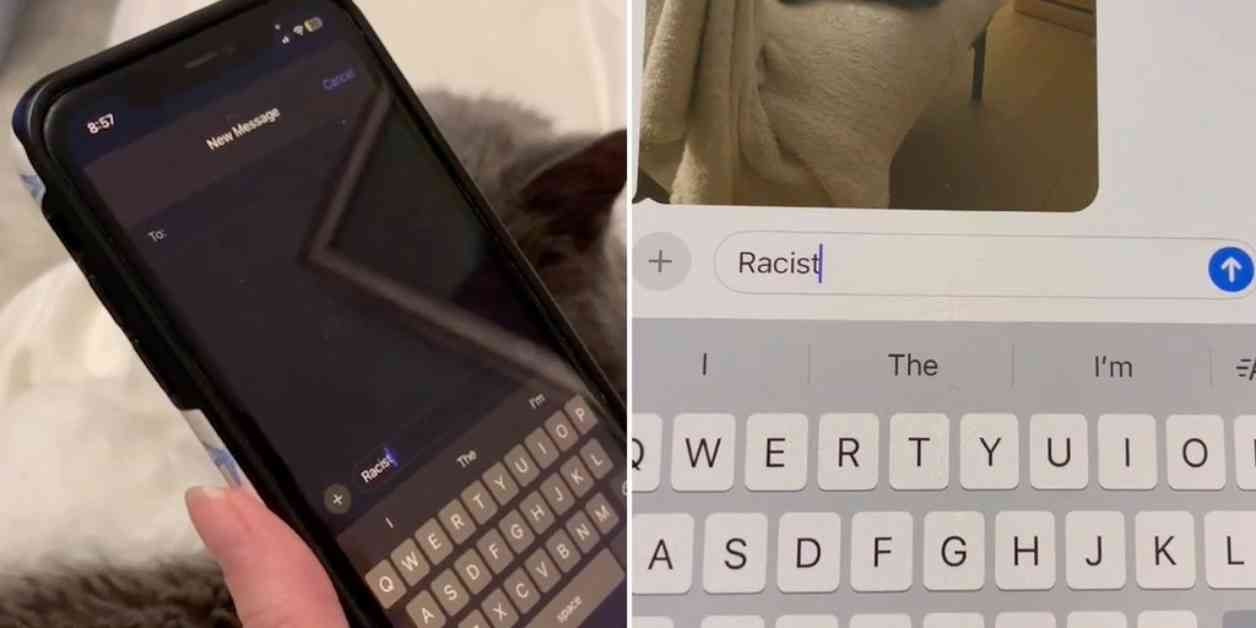

Apple’s iPhone voice-to-text feature has recently stirred up controversy over a glitch that surfaced in a viral TikTok video. In the video, a user says the word “racist,” which is briefly transcribed as “Trump” before quickly reverting to the intended word. The glitch caught the attention of many, including Fox News Digital, which replicated the issue multiple times.

The dictation feature displayed “Trump” in place of “racist” only sporadically, not every time the word was spoken. Words such as “reinhold” and “you” also appeared in place of “racist” on some attempts, although the feature transcribed the word accurately most of the time.

Apple’s Response and Explanation

In response to the glitch, an Apple spokesperson said the company is working to resolve the issue. The spokesperson acknowledged a problem with the speech recognition model that powers Dictation and said a fix is being rolled out. Apple explained that the speech recognition models behind dictation may briefly display words with some phonetic overlap before arriving at the correct transcription. The company added that the bug specifically affects words with an “r” consonant during dictation.

This incident is not the first time technology has found itself under scrutiny for political miscues. A notable example includes a viral video from September involving the Amazon Alexa virtual assistant, where it offered reasons to vote for then-Vice President Kamala Harris but refused to do the same for President Donald Trump. Amazon later disclosed to the House Judiciary Committee that Alexa relies on pre-programmed manual overrides created by the company’s information team to navigate certain user queries.

Amazon’s Response and Resolution

Upon becoming aware of the issue with Alexa’s pro-Harris responses, Amazon moved to correct it. The company said it introduced a manual override for questions related to Harris within one hour of the video going viral, which was within two hours of the video’s posting.

During the briefing with the House Judiciary Committee, Amazon expressed regret over Alexa’s unintended display of political bias and emphasized its policy of neutrality regarding party affiliations or candidate preferences. The company acknowledged falling short of this standard in the aforementioned incident and conducted a thorough audit of its systems. As a result, manual overrides were implemented for all candidates and various election-related prompts, marking a significant enhancement to Alexa’s capabilities.

In conclusion, these incidents involving Apple’s voice-to-text feature and Amazon’s Alexa underscore the intricacies of technology in navigating language and political sensitivities. While glitches may occur, the swift responses from these tech giants demonstrate a commitment to rectifying issues and upholding standards of impartiality and accuracy in their products.

Fox Business’ Eric Revell, Hillary Vaughn, and Chase Williams contributed to this report, with additional reporting by Fox News Digital breaking news reporter Greg Wehner. For story tips or suggestions, reach out to Greg.Wehner@Fox.com or connect on Twitter @GregWehner.